Jacobian matrix and determinant


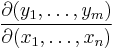

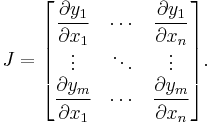

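In vector calculus, the Jacobian matrix is the matrix of all first-order partial derivatives of a vector- or scalar-valued function with respect to another vector. Suppose $F : \mathbb{R}^n \to \mathbb{R}^m$ is a function from Euclidean n-space to Euclidean m-space. Such a function is given by m real-valued component functions, $y_1(x_1,\ldots,x_n), \ldots, y_m(x_1,\ldots,x_n)$. The partial derivatives of all these functions (if they exist) can be organized in an m-by-n matrix, the Jacobian matrix J of F, as follows:

$$J = \begin{bmatrix} \dfrac{\partial y_1}{\partial x_1} & \cdots & \dfrac{\partial y_1}{\partial x_n} \\ \vdots & \ddots & \vdots \\ \dfrac{\partial y_m}{\partial x_1} & \cdots & \dfrac{\partial y_m}{\partial x_n} \end{bmatrix}.$$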

This matrix is also denoted by $J_F(x_1,\ldots,x_n)$ and $\dfrac{\partial(y_1,\ldots,y_m)}{\partial(x_1,\ldots,x_n)}$. The i-th row (i = 1, ..., m) of this matrix is the gradient of the i-th component function $y_i$: $\nabla y_i$.
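The definition can be checked numerically. The following is a minimal sketch, not a reference implementation: it approximates each partial derivative by a central difference. The helper name `numerical_jacobian`, the step size `h`, and the sample map `f` are all illustrative assumptions.

```python
def numerical_jacobian(f, x, h=1e-6):
    """Approximate the m-by-n Jacobian of f at x by central differences."""
    n = len(x)
    m = len(f(x))
    J = [[0.0] * n for _ in range(m)]
    for j in range(n):
        # Perturb only the j-th input variable.
        x_plus = list(x)
        x_minus = list(x)
        x_plus[j] += h
        x_minus[j] -= h
        f_plus, f_minus = f(x_plus), f(x_minus)
        for i in range(m):
            # Entry (i, j) approximates d y_i / d x_j.
            J[i][j] = (f_plus[i] - f_minus[i]) / (2 * h)
    return J


def f(x):
    # Hypothetical example map from R^2 to R^3.
    x1, x2 = x
    return [x1 * x2, x1 + x2, x1 ** 2]


J = numerical_jacobian(f, [2.0, 3.0])
# Analytic Jacobian of this f is [[x2, x1], [1, 1], [2*x1, 0]].
expected = [[3.0, 2.0], [1.0, 1.0], [4.0, 0.0]]
```

Row i of `J` holds the approximate gradient of the i-th component, which matches the row-wise description above.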

The Jacobian determinant (often simply called the Jacobian) is the determinant of the Jacobian matrix.

These concepts are named after the mathematician Carl Gustav Jacob Jacobi. The term "Jacobian" is normally pronounced /dʒəˈkoʊbiən/, but sometimes also /jəˈkoʊbiən/.


Jacobian matrix

The Jacobian of a function describes the orientation of a tangent plane to the function at a given point. In this way, the Jacobian generalizes the gradient of a scalar-valued function of multiple variables, which itself generalizes the derivative of a scalar-valued function of a scalar. Likewise, the Jacobian can also be thought of as describing the amount of "stretching" that a transformation imposes. For example, if $(x_2, y_2) = f(x_1, y_1)$ is used to transform an image, the Jacobian of f, $J(x_1, y_1)$, describes how much the image in the neighborhood of $(x_1, y_1)$ is stretched in the x, y, and xy directions.

If a function is differentiable at a point, its derivative is given in coordinates by the Jacobian, but a function doesn't need to be differentiable for the Jacobian to be defined, since only the partial derivatives are required to exist.

The importance of the Jacobian lies in the fact that it represents the best linear approximation to a differentiable function near a given point. In this sense, the Jacobian is the derivative of a multivariate function. For a function of n variables, n > 1, the derivative of a numerical function must be matrix-valued, or a partial derivative.

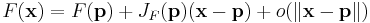

If p is a point in $\mathbb{R}^n$ and F is differentiable at p, then its derivative is given by $J_F(p)$. In this case, the linear map described by $J_F(p)$ is the best linear approximation of F near the point p, in the sense that

$$F(x) = F(p) + J_F(p)(x - p) + o(\|x - p\|)$$

for x close to p, where o is the little o-notation (for $x \to p$, not $x \to \infty$) and $\|x - p\|$ is the distance between x and p.
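The little-o statement says that the approximation error shrinks faster than the distance to p. The sketch below illustrates this for a hypothetical map `F` with a hand-derived Jacobian `JF` (both are assumptions chosen for the example): the ratio of error to distance should itself tend to zero as x approaches p.

```python
import math


def F(x, y):
    # Illustrative map from R^2 to R^2 (an assumption, not from the article).
    return (x * x * y, math.sin(x) + y)


def JF(x, y):
    # Hand-derived Jacobian of the map F above.
    return ((2 * x * y, x * x), (math.cos(x), 1.0))


def linear_approx(p, x):
    """Evaluate F(p) + JF(p)(x - p)."""
    Fp = F(*p)
    J = JF(*p)
    d = (x[0] - p[0], x[1] - p[1])
    return tuple(Fp[i] + J[i][0] * d[0] + J[i][1] * d[1] for i in range(2))


def error_over_distance(p, x):
    """Return ||F(x) - linear_approx|| / ||x - p||."""
    exact = F(*x)
    approx = linear_approx(p, x)
    err = math.hypot(exact[0] - approx[0], exact[1] - approx[1])
    return err / math.hypot(x[0] - p[0], x[1] - p[1])


p = (1.0, 2.0)
ratios = [error_over_distance(p, (p[0] + t, p[1] + t)) for t in (1e-1, 1e-2, 1e-3)]
```

For this smooth example the entries of `ratios` decrease roughly in proportion to the step, which is the behavior the o-term describes.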

In a sense, both the gradient and Jacobian are "first derivatives" — the former the first derivative of a scalar function of several variables, the latter the first derivative of a vector function of several variables. In general, the gradient can be regarded as a special version of the Jacobian: it is the Jacobian of a scalar function of several variables.

The Jacobian of the gradient has a special name: the Hessian matrix, which in a sense is the "second derivative" of the scalar function of several variables in question.
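As a small worked illustration (the function below is an arbitrary choice made for this example), taking the Jacobian of the gradient row by row gives the Hessian:

```latex
f(x, y) = x^2 y + y^3, \qquad
\nabla f = \begin{bmatrix} 2xy \\ x^2 + 3y^2 \end{bmatrix}, \qquad
H_f = J_{\nabla f} =
\begin{bmatrix}
\dfrac{\partial (2xy)}{\partial x} & \dfrac{\partial (2xy)}{\partial y} \\[3pt]
\dfrac{\partial (x^2 + 3y^2)}{\partial x} & \dfrac{\partial (x^2 + 3y^2)}{\partial y}
\end{bmatrix}
=
\begin{bmatrix} 2y & 2x \\ 2x & 6y \end{bmatrix}.
```

The result is symmetric here because the second partial derivatives of this polynomial are continuous.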

Inverse

According to the inverse function theorem, the matrix inverse of the Jacobian matrix of an invertible function is the Jacobian matrix of the inverse function. That is, for some function $F : \mathbb{R}^n \to \mathbb{R}^n$ and a point p in $\mathbb{R}^n$,

$$J_{F^{-1}}(F(p)) = [J_F(p)]^{-1}.$$

It follows that the (scalar) inverse of the Jacobian determinant of a transformation is the Jacobian determinant of the inverse transformation.
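A quick numerical check of both statements, sketched for the polar-to-Cartesian map $(r, \theta) \mapsto (r\cos\theta, r\sin\theta)$ and its inverse away from the origin. The function names and the sample point are assumptions made for this illustration, and both Jacobians are written out by hand.

```python
import math


def jac_polar_to_cartesian(r, theta):
    # Jacobian of (r, theta) -> (r cos(theta), r sin(theta)).
    return [[math.cos(theta), -r * math.sin(theta)],
            [math.sin(theta), r * math.cos(theta)]]


def jac_cartesian_to_polar(x, y):
    # Jacobian of (x, y) -> (sqrt(x^2 + y^2), atan2(y, x)), valid for (x, y) != (0, 0).
    r2 = x * x + y * y
    r = math.sqrt(r2)
    return [[x / r, y / r],
            [-y / r2, x / r2]]


def matmul2(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def det2(A):
    return A[0][0] * A[1][1] - A[0][1] * A[1][0]


r, theta = 2.0, 0.7
x, y = r * math.cos(theta), r * math.sin(theta)

J_forward = jac_polar_to_cartesian(r, theta)
J_inverse = jac_cartesian_to_polar(x, y)   # evaluated at F(p)
product = matmul2(J_inverse, J_forward)    # should be close to the identity
det_product = det2(J_forward) * det2(J_inverse)  # should be close to 1
```

The product of the two matrices is the identity up to rounding, and the two determinants (r and 1/r) are reciprocals.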

Examples

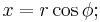

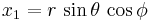

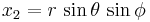

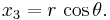

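Example 1. The transformation from spherical coordinates (r, θ, φ) to Cartesian coordinates $(x_1, x_2, x_3)$ is given by the function $F : \mathbb{R}^+ \times [0,\pi) \times [0,2\pi) \to \mathbb{R}^3$ with components:

$$x_1 = r\,\sin\theta\,\cos\phi, \qquad x_2 = r\,\sin\theta\,\sin\phi, \qquad x_3 = r\,\cos\theta.$$

The Jacobian matrix for this coordinate change is

$$J_F(r,\theta,\phi) = \begin{bmatrix}
\dfrac{\partial x_1}{\partial r} & \dfrac{\partial x_1}{\partial \theta} & \dfrac{\partial x_1}{\partial \phi} \\[3pt]
\dfrac{\partial x_2}{\partial r} & \dfrac{\partial x_2}{\partial \theta} & \dfrac{\partial x_2}{\partial \phi} \\[3pt]
\dfrac{\partial x_3}{\partial r} & \dfrac{\partial x_3}{\partial \theta} & \dfrac{\partial x_3}{\partial \phi}
\end{bmatrix} = \begin{bmatrix}
\sin\theta\,\cos\phi & r\,\cos\theta\,\cos\phi & -r\,\sin\theta\,\sin\phi \\
\sin\theta\,\sin\phi & r\,\cos\theta\,\sin\phi & r\,\sin\theta\,\cos\phi \\
\cos\theta & -r\,\sin\theta & 0
\end{bmatrix}.$$

The determinant is $r^2 \sin\theta$. As an example, since $dV = dx_1\,dx_2\,dx_3$, this determinant implies that $dV = r^2 \sin\theta\,dr\,d\theta\,d\phi$, where dV is the differential volume element.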
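The determinant can be confirmed numerically. This sketch builds the Jacobian matrix of the spherical-to-Cartesian map (with θ measured from the $x_3$ axis, as in the example) and compares its determinant with $r^2 \sin\theta$ at one sample point. The helper names and the sample values are illustrative assumptions.

```python
import math


def spherical_jacobian(r, theta, phi):
    # Rows: partial derivatives of x1, x2, x3 with respect to (r, theta, phi).
    st, ct = math.sin(theta), math.cos(theta)
    sp, cp = math.sin(phi), math.cos(phi)
    return [[st * cp, r * ct * cp, -r * st * sp],
            [st * sp, r * ct * sp, r * st * cp],
            [ct, -r * st, 0.0]]


def det3(M):
    # Cofactor expansion along the first row.
    (a, b, c), (d, e, f), (g, h, i) = M
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


r, theta, phi = 2.0, 0.5, 1.2
det_value = det3(spherical_jacobian(r, theta, phi))
closed_form = r ** 2 * math.sin(theta)
```

The two values agree to rounding error, and the result does not depend on φ.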

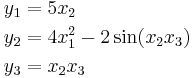

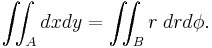

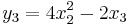

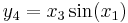

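Example 2. The Jacobian matrix of the function $F : \mathbb{R}^3 \to \mathbb{R}^4$ with components

$$y_1 = x_1, \qquad y_2 = 5x_3, \qquad y_3 = 4x_2^2 - 2x_3, \qquad y_4 = x_3 \sin(x_1)$$

is

$$J_F(x_1,x_2,x_3) = \begin{bmatrix}
\dfrac{\partial y_1}{\partial x_1} & \dfrac{\partial y_1}{\partial x_2} & \dfrac{\partial y_1}{\partial x_3} \\[3pt]
\dfrac{\partial y_2}{\partial x_1} & \dfrac{\partial y_2}{\partial x_2} & \dfrac{\partial y_2}{\partial x_3} \\[3pt]
\dfrac{\partial y_3}{\partial x_1} & \dfrac{\partial y_3}{\partial x_2} & \dfrac{\partial y_3}{\partial x_3} \\[3pt]
\dfrac{\partial y_4}{\partial x_1} & \dfrac{\partial y_4}{\partial x_2} & \dfrac{\partial y_4}{\partial x_3}
\end{bmatrix} = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 0 & 5 \\ 0 & 8x_2 & -2 \\ x_3\cos(x_1) & 0 & \sin(x_1) \end{bmatrix}.$$

This example shows that the Jacobian need not be a square matrix.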
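A non-square Jacobian still acts as a linear map: multiplying it by a direction v gives the directional derivative of F along v. The sketch below assumes the components $y_1 = x_1$, $y_2 = 5x_3$, $y_3 = 4x_2^2 - 2x_3$, $y_4 = x_3\sin(x_1)$, which are consistent with the 4-by-3 Jacobian matrix of Example 2. The function names, the sample point, and the direction are illustrative.

```python
import math


def F(x1, x2, x3):
    # Components assumed for Example 2 (consistent with its Jacobian matrix).
    return [x1, 5 * x3, 4 * x2 ** 2 - 2 * x3, x3 * math.sin(x1)]


def J(x1, x2, x3):
    # The 4-by-3 Jacobian matrix of F.
    return [[1.0, 0.0, 0.0],
            [0.0, 0.0, 5.0],
            [0.0, 8 * x2, -2.0],
            [x3 * math.cos(x1), 0.0, math.sin(x1)]]


def jacobian_times_vector(x, v):
    return [sum(row[j] * v[j] for j in range(3)) for row in J(*x)]


def directional_difference(x, v, h=1e-6):
    # Central difference of F along the direction v.
    x_plus = [xi + h * vi for xi, vi in zip(x, v)]
    x_minus = [xi - h * vi for xi, vi in zip(x, v)]
    return [(a - b) / (2 * h) for a, b in zip(F(*x_plus), F(*x_minus))]


x = [0.5, 1.0, 2.0]
v = [1.0, -2.0, 0.5]
exact = jacobian_times_vector(x, v)   # a vector in R^4
approx = directional_difference(x, v)
```

The input direction lives in three dimensions and the output in four, matching the shape of the matrix.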

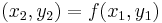

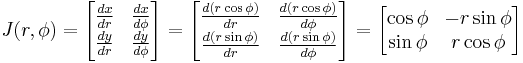

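Example 3. For the map $x = r\cos\theta$, $y = r\sin\theta$ from polar to Cartesian coordinates, the Jacobian determinant is

$$\frac{\partial(x, y)}{\partial(r, \theta)} = \begin{vmatrix} \cos\theta & -r\sin\theta \\ \sin\theta & r\cos\theta \end{vmatrix} = r.$$

It shows how an integral in the Cartesian coordinate system is transformed into an integral in the polar coordinate system:

$$\iint_A dx\,dy = \iint_B r\,dr\,d\theta,$$

where B is the region in the (r, θ) plane that corresponds to A.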
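The role of the factor r can be seen by integrating over a disc in polar coordinates. The following is a rough sketch using a midpoint rule, assuming the standard map $x = r\cos\theta$, $y = r\sin\theta$ with Jacobian determinant r. The function name `integrate_polar` and the grid sizes are assumptions made for this example.

```python
import math


def integrate_polar(f, r_max, n_r=200, n_theta=200):
    """Midpoint-rule integral of f(x, y) over the disc of radius r_max."""
    dr = r_max / n_r
    dtheta = 2 * math.pi / n_theta
    total = 0.0
    for i in range(n_r):
        r = (i + 0.5) * dr
        for k in range(n_theta):
            theta = (k + 0.5) * dtheta
            x, y = r * math.cos(theta), r * math.sin(theta)
            # The factor r is the Jacobian determinant of the polar map.
            total += f(x, y) * r * dr * dtheta
    return total


area = integrate_polar(lambda x, y: 1.0, 1.0)                    # close to pi
second_moment = integrate_polar(lambda x, y: x * x + y * y, 1.0)  # close to pi / 2
```

Dropping the factor `r` from the sum would instead give the area of the rectangle $[0, 1] \times [0, 2\pi]$ in the (r, θ) plane, not the area of the disc.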

In dynamical systems

Consider a dynamical system of the form x' = F(x), where x' is the (component-wise) time derivative of x, and $F : \mathbb{R}^n \to \mathbb{R}^n$ is continuous and differentiable. If $F(x_0) = 0$, then $x_0$ is a stationary point (also called a fixed point). The behavior of the system near a stationary point is related to the eigenvalues of $J_F(x_0)$, the Jacobian of F at the stationary point.[1] Specifically, if all the eigenvalues have a negative real part, then the system is stable near the stationary point; if any eigenvalue has a positive real part, then the point is unstable.
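As an illustration of the eigenvalue criterion, consider a damped pendulum, $\theta' = \omega$, $\omega' = -\sin\theta - c\,\omega$. The system is a textbook example chosen here as an assumption, and the damping value and function names are illustrative. Its stationary points are (0, 0) and (π, 0). The sketch classifies each from the eigenvalues of the 2-by-2 Jacobian, which are computed from the trace and determinant.

```python
import cmath
import math


def pendulum_jacobian(theta, damping):
    # Jacobian of F(theta, omega) = (omega, -sin(theta) - damping * omega).
    return [[0.0, 1.0],
            [-math.cos(theta), -damping]]


def eigenvalues_2x2(A):
    # Roots of lambda^2 - trace * lambda + det = 0.
    trace = A[0][0] + A[1][1]
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    root = cmath.sqrt(trace * trace - 4 * det)
    return ((trace + root) / 2, (trace - root) / 2)


def classify(A, tol=1e-12):
    real_parts = [ev.real for ev in eigenvalues_2x2(A)]
    if all(re < -tol for re in real_parts):
        return "stable"
    if any(re > tol for re in real_parts):
        return "unstable"
    # Eigenvalues on the imaginary axis: the linearization does not decide.
    return "inconclusive"


hanging = classify(pendulum_jacobian(0.0, damping=0.5))
inverted = classify(pendulum_jacobian(math.pi, damping=0.5))
```

With positive damping the hanging position is classified as stable and the inverted position as unstable. With zero damping the hanging position has purely imaginary eigenvalues, a case the criterion above does not cover.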

Jacobian determinant

If m = n, then F is a function from n-space to n-space and the Jacobian matrix is a square matrix. We can then form its determinant, known as the Jacobian determinant. The Jacobian determinant is itself sometimes called "the Jacobian."

The Jacobian determinant at a given point gives important information about the behavior of F near that point. For instance, the continuously differentiable function F is invertible near a point $p \in \mathbb{R}^n$ if the Jacobian determinant at p is non-zero. This is the inverse function theorem. Furthermore, if the Jacobian determinant at p is positive, then F preserves orientation near p; if it is negative, F reverses orientation. The absolute value of the Jacobian determinant at p gives the factor by which the function F expands or shrinks volumes near p; this is why it occurs in the general substitution rule.

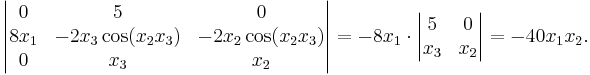

Example

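The Jacobian determinant of the function $F : \mathbb{R}^3 \to \mathbb{R}^3$ with components

$$y_1 = 5x_2, \qquad y_2 = 4x_1^2 - 2\sin(x_2 x_3), \qquad y_3 = x_2 x_3$$

is

$$\begin{vmatrix} 0 & 5 & 0 \\ 8x_1 & -2x_3\cos(x_2 x_3) & -2x_2\cos(x_2 x_3) \\ 0 & x_3 & x_2 \end{vmatrix} = -8x_1 \begin{vmatrix} 5 & 0 \\ x_3 & x_2 \end{vmatrix} = -40x_1 x_2.$$

From this we see that F reverses orientation near those points where $x_1$ and $x_2$ have the same sign; the function is locally invertible everywhere except near points where $x_1 = 0$ or $x_2 = 0$. Intuitively, if you start with a tiny object around the point (1,1,1) and apply F to that object, you will get an object with approximately 40 times the volume of the original one.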
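The volume statement can be tested directly by mapping the edges of a tiny cube at (1,1,1) and measuring the signed volume of the image parallelepiped. This sketch assumes the components $y_1 = 5x_2$, $y_2 = 4x_1^2 - 2\sin(x_2 x_3)$, $y_3 = x_2 x_3$, whose Jacobian determinant is $-40x_1x_2$. The helper names and the edge length `h` are illustrative.

```python
import math


def F(x1, x2, x3):
    # Components assumed for this example; they give determinant -40 * x1 * x2.
    return (5 * x2, 4 * x1 ** 2 - 2 * math.sin(x2 * x3), x2 * x3)


def det3(M):
    # Cofactor expansion along the first row.
    (a, b, c), (d, e, f), (g, h, i) = M
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


def signed_volume_ratio(p, h=1e-4):
    """Signed volume of the image of a cube with edge h at p, divided by h^3."""
    base = F(*p)
    edges = [[0.0] * 3 for _ in range(3)]
    for j in range(3):
        q = list(p)
        q[j] += h
        image = F(*q)
        for i in range(3):
            # Column j holds the image of the j-th edge of the cube.
            edges[i][j] = image[i] - base[i]
    return det3(edges) / h ** 3


ratio = signed_volume_ratio((1.0, 1.0, 1.0))
```

The ratio comes out close to -40: the magnitude is the volume scaling factor, and the negative sign reflects the orientation reversal at a point where $x_1$ and $x_2$ have the same sign.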

Uses

The Jacobian determinant is used when making a change of variables while integrating a function over its domain. To account for the change of coordinates, the absolute value of the Jacobian determinant arises as a multiplicative factor within the integral. Normally it is required that the change of coordinates be injective on the domain of integration. The Jacobian determinant, as a result, is usually well defined.
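Written out, and assuming an injective, continuously differentiable change of variables $\varphi : U \to \mathbb{R}^n$ on an open set U (the symbols $\varphi$, U and f are introduced here for illustration), the rule and a standard polar-coordinate instance of it read:

```latex
\int_{\varphi(U)} f(y)\, dy = \int_{U} f(\varphi(x))\, \bigl|\det J_{\varphi}(x)\bigr|\, dx,
\qquad\text{for example}\qquad
\iint_{\mathbb{R}^2} e^{-(x^2 + y^2)}\, dx\, dy
= \int_{0}^{2\pi}\!\!\int_{0}^{\infty} e^{-r^2}\, r\, dr\, d\theta
= 2\pi \cdot \tfrac{1}{2} = \pi .
```

In the example, the factor r is the absolute value of the Jacobian determinant of the polar map, and it is what makes the radial integral elementary.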

See also

- Pushforward (differential)

- Hessian matrix

Notes

- [1] D.K. Arrowsmith and C.M. Place, Dynamical Systems, Section 3.3, Chapman & Hall, London, 1992. ISBN 0-412-39080-9.

External links

- Ian Craw's Undergraduate Teaching Page An easy to understand explanation of Jacobians

- Mathworld A more technical explanation of Jacobians
